Today I wanted to show my team how we might be able to use one of the new features in SQL Server 2022. In particular, how to access data in an AWS S3 bucket directly from SQL Server running on my local laptop, using an AWS Workspace.

To start with I have configured the following:

PREREQUISITES

- AWS Account IAM User with an access key pair.

- AWS permissions configured on an S3 bucket.

- SQL Server 2022 installed.

- SQL Server 2022 Polybase features installed.

- TLS Certificate installed on SQL Server.

- Polybase enabled in sp_configure.

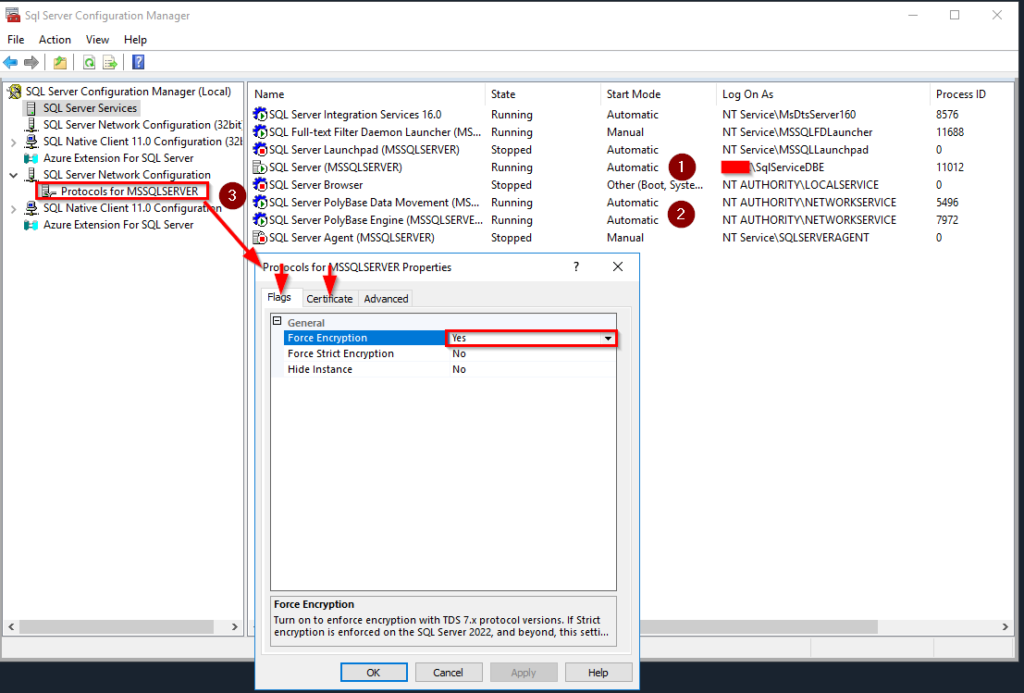

While the TLS certificate install is beyond the scope of this quick post, I can check to see if I have one in the SQL Server Configuration Manager. This will also let me know if Polybase features are installed and running.

(1) My SQL Server is running under a Domain Service account. (2) Polybase features are running, (3) I have a certificate installed and encryption set to forced.

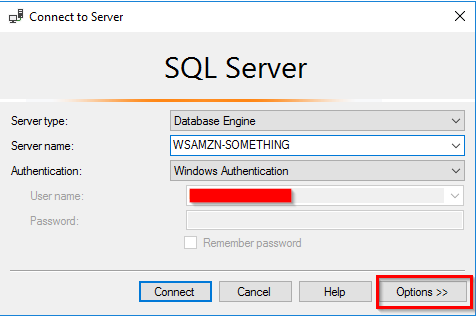

Next I will log into the server using SQL Server Management Studio (SSMS) Version 19.1 or greater.

Since this is the first time accessing the server, I will click the OPTIONS button.

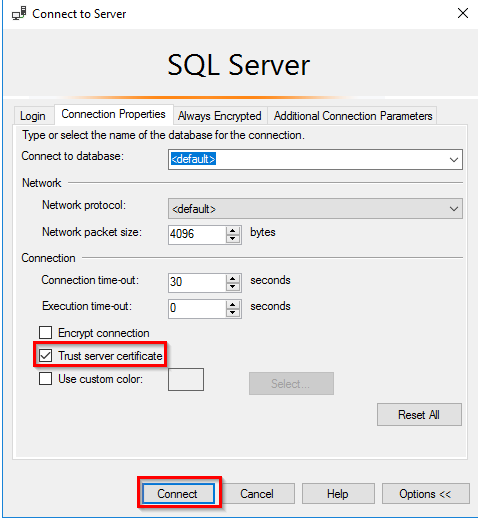

I will then select TRUST SERVER CERTIFICATE. And CONNECT.

OK – READY TO ROLL!

First to give credit. I will be using many of the steps presented in the Microsoft documentation found here:

Access external data: S3-compatible object storage – PolyBase – SQL Server | Microsoft Learn

I want to make sure I have Polybase enabled. Installing it does not enable it. This is the first step I will take. Here is the code I will run.

exec sp_configure @configname = 'polybase enabled', @configvalue = 1;

GO

RECONFIGURE

GO

exec sp_configure @configname = 'polybase enabled';

GOCREATE A TEST DATABASE

CREATE DATABASE PolybaseDemo;CREATE A SCOPED CREDENTIAL

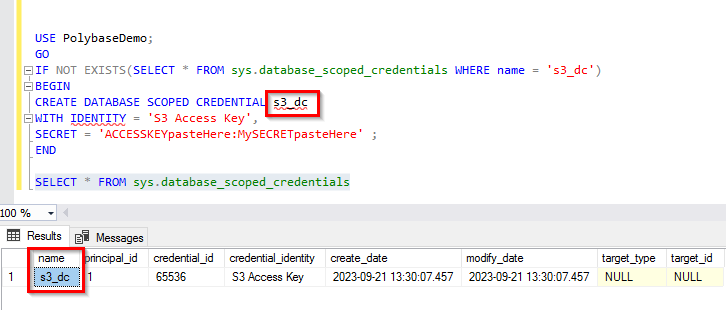

USE PolybaseDemo;

GO

IF NOT EXISTS(SELECT * FROM sys.database_scoped_credentials WHERE name = 's3_dc')

BEGIN

CREATE DATABASE SCOPED CREDENTIAL s3_dc

WITH IDENTITY = 'S3 Access Key',

SECRET = 'ACCESSKEYpasteHere:MySECRETpasteHere' ;

END

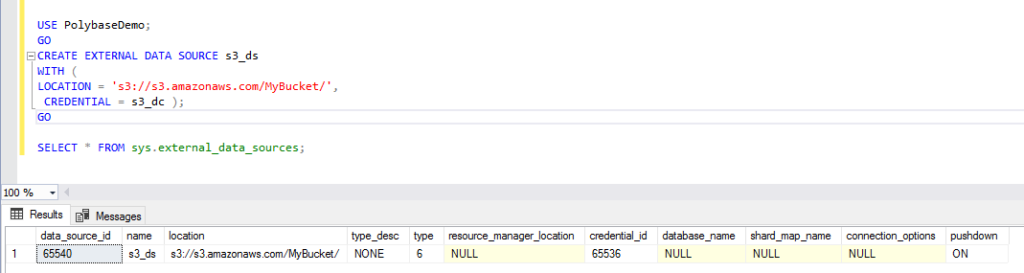

CREATE A DATA SOURCE

This will contain the Access key and Secret for my AWS IAM User. Following best practices, I am using a service account IAM USER that has read-only permissions on a specific bucket.

CREATE EXTERNAL DATA SOURCE s3_ds

WITH (

LOCATION = 's3://s3.amazonaws.com/MyBucket/',

CREDENTIAL = s3_dc );

GO

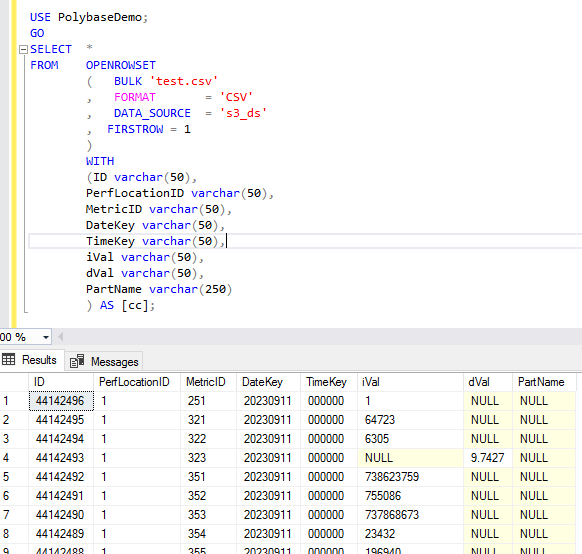

QUERY THE DATA DIRECTLY

I am now ready to bring in data from a test csv file that I have sitting in my AWS S3 bucket.

SELECT *

FROM OPENROWSET

( BULK 'test.csv'

, FORMAT = 'CSV'

, DATA_SOURCE = 's3_ds'

, FIRSTROW = 1

)

WITH

(ID varchar(50),

PerfLocationID varchar(50),

MetricID varchar(50),

DateKey varchar(50),

TimeKey varchar(50),

iVal varchar(50),

dVal varchar(50),

PartName varchar(250)

) AS [cc];

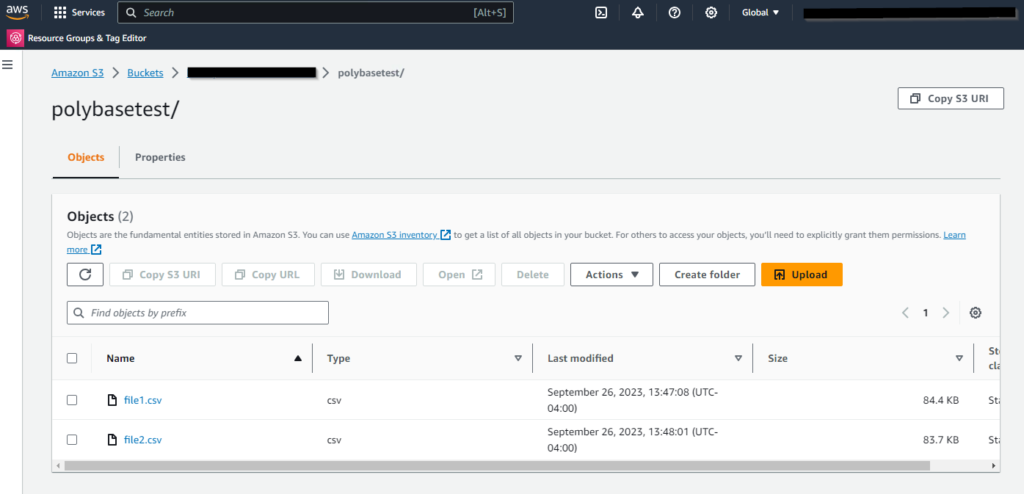

BULK FILES

No worries. I have set up a test bucket with two files that I want to import. This time though, I want to create a table on the fly to store the data from ALL the files in this bucket.

First, I need to define a FILEFORMAT my table can use to communicate with S3. Polybase has the ability to import images, pdfs, and all kinds of files. Keeping the demo simple I’m going for a CSV filetype.

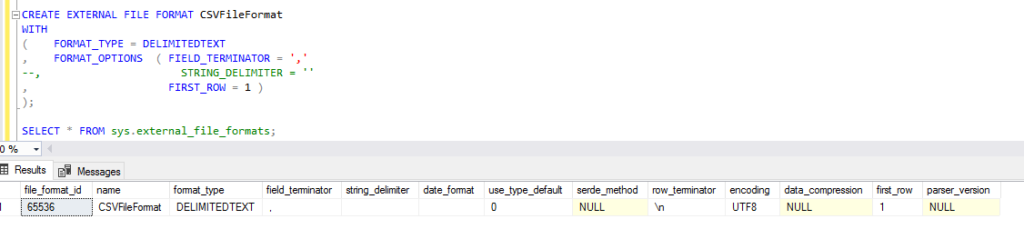

FILE FORMAT

CREATE EXTERNAL FILE FORMAT CSVFileFormat

WITH

( FORMAT_TYPE = DELIMITEDTEXT

, FORMAT_OPTIONS ( FIELD_TERMINATOR = ','

--, STRING_DELIMITER = ''

, FIRST_ROW = 1 )

);

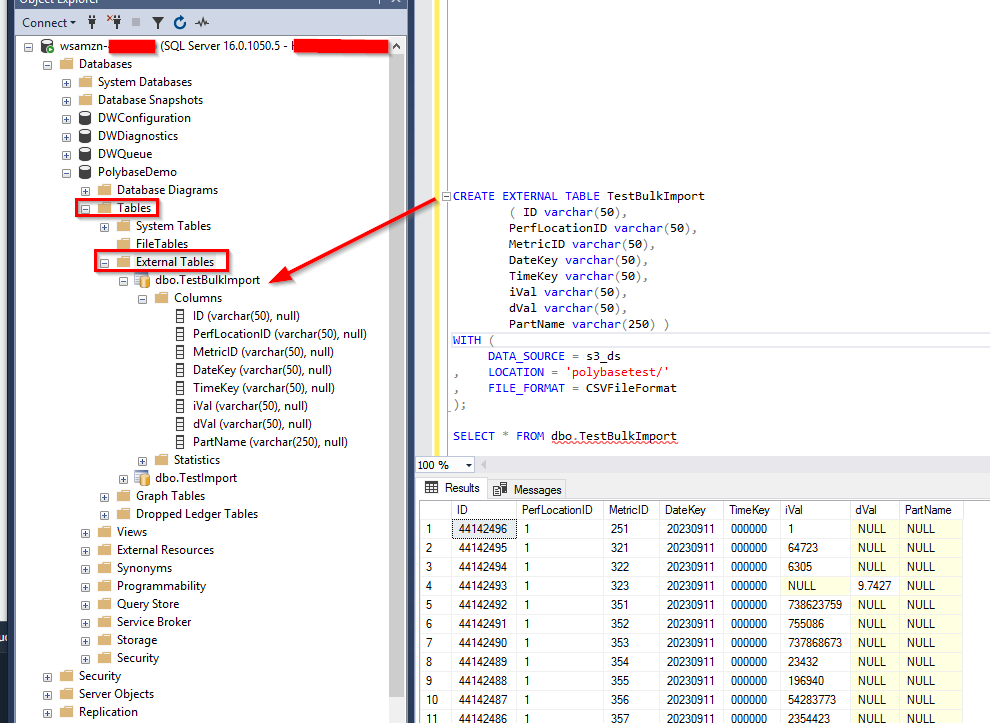

CREATE TABLE

Now I can create a new table, insert my multi-file data directly, and query it all in a single event.

CREATE EXTERNAL TABLE TestImport

( ID varchar(50),

PerfLocationID varchar(50),

MetricID varchar(50),

DateKey varchar(50),

TimeKey varchar(50),

iVal varchar(50),

dVal varchar(50),

PartName varchar(250) )

WITH (

DATA_SOURCE = s3_ds

, LOCATION = 'test.csv'

, FILE_FORMAT = CSVFileFormat

);

Notice that the imported table is located in the EXTERNAL TABLES folder.

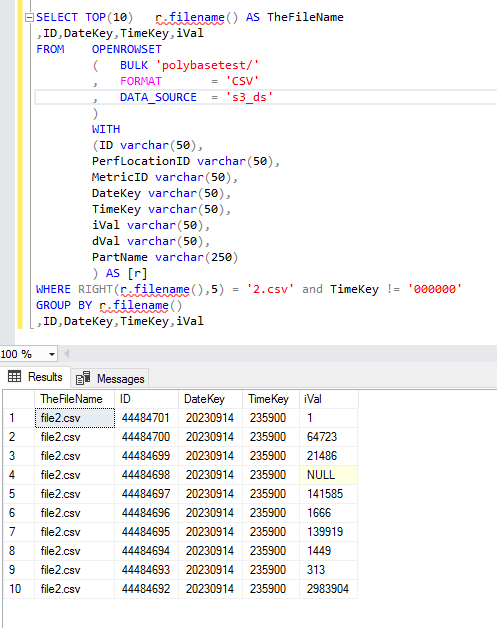

BONUS QUERY

Here is an example, using a WHERE clause to filter on the filename and data combined.

SELECT TOP(10) r.filename() AS TheFileName

,ID,DateKey,TimeKey,iVal

FROM OPENROWSET

( BULK 'polybasetest/'

, FORMAT = 'CSV'

, DATA_SOURCE = 's3_ds'

)

WITH

(ID varchar(50),

PerfLocationID varchar(50),

MetricID varchar(50),

DateKey varchar(50),

TimeKey varchar(50),

iVal varchar(50),

dVal varchar(50),

PartName varchar(250)

) AS [r]

WHERE RIGHT(r.filename(),5) = '2.csv' and TimeKey != '000000'

GROUP BY r.filename()

,ID,DateKey,TimeKey,iVal

That’s it for this quick demo. Looking forward to learning more about SQL SERVER 2022.